The big event of June was the release of a beta streaming version of Gruss. As it is still in development, the Gruss guys requested feedback, and users highlighted a number of bugs. We are now on the sixth release, so it’s good to know the program is being worked on and improved. The bug I found related to moving on from suspended markets, and after I fed it back via the forum a fix was quickly released.

It took a little time for me to grasp the full effects of streaming. At first I thought the refresh was poor, as updates seemed very random. In fact the stream only updates when there is something to update, i.e. market activity, and doesn’t waste bandwidth by refreshing the same data repeatedly, as happened before streaming. This gives a refresh chart that can have quite large gaps between updates, especially on markets with some time to go before the off. With only a few minutes to the off, the refreshes now come in more often than every 200ms. As there are no requests for price data, there’s no added request delay on the prices. To note, the charts on the VPS are showing lower times than those at home. The request delay will still be relevant when placing orders in the market, but no data is available on what it is.
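To illustrate why a streamed refresh chart looks uneven, here is a rough Python sketch that turns a list of update timestamps into the gaps between them and reports what share arrive faster than 200ms. It is not how Gruss draws its chart; the function names and the idea of logging a timestamp per update are my own assumptions.

```python
from datetime import timedelta

ONE_MS = timedelta(milliseconds=1)


def refresh_gaps_ms(update_times):
    """Return the gap in milliseconds between consecutive update timestamps.

    update_times is assumed to be a chronologically ordered list of
    datetime objects, one per streamed update (hypothetical logging).
    """
    return [(later - earlier) / ONE_MS
            for earlier, later in zip(update_times, update_times[1:])]


def share_faster_than(gaps_ms, threshold_ms=200.0):
    """Fraction of gaps shorter than threshold_ms (0.0 if there are no gaps)."""
    if not gaps_ms:
        return 0.0
    return sum(1 for gap in gaps_ms if gap < threshold_ms) / len(gaps_ms)
```

With streaming, a long gap simply means nothing changed in the market, so a quiet market an hour before the off and a busy one two minutes out will give very different charts from the same connection.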

Another major event, for me, was the changes to the Aus turnover eligibility. I posted about this here. I’m on with the coding around this. I have a section of code that runs only once, when a market is selected, rather than on every refresh. I’m adding the NSW code there. Checking against the list of courses was straightforward, but tracking the traded back bets over a week is a little more complicated.
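As a rough idea of the two pieces involved, here is a minimal Python sketch: a one-off course check to run when a market is selected, and a rolling seven-day total of matched back stakes. This is not Oscar’s actual code. The course names are placeholders, the class and function names are made up, and the sketch assumes bets are recorded in time order; the eligibility rules themselves are not encoded here.

```python
from collections import deque
from datetime import timedelta

# Placeholder entries only; the real list of NSW courses is not reproduced here.
NSW_COURSES = {"course a", "course b"}


def is_nsw_course(course_name):
    """One-off check intended to run when a market is selected."""
    return course_name.strip().lower() in NSW_COURSES


class WeeklyBackTracker:
    """Rolling total of matched back stakes over a time window (default 7 days)."""

    def __init__(self, window=timedelta(days=7)):
        self.window = window
        self._bets = deque()  # (matched_at, stake), oldest first

    def record(self, matched_at, stake):
        """Store a matched back bet; assumes calls arrive in time order."""
        self._bets.append((matched_at, stake))

    def total(self, now):
        """Drop bets that have aged out of the window, then sum what is left."""
        cutoff = now - self.window
        while self._bets and self._bets[0][0] <= cutoff:
            self._bets.popleft()
        return sum(stake for _, stake in self._bets)
```

The awkward part is the second piece: the total has to survive restarts and span many markets, so in practice the bets would need saving somewhere persistent rather than held in memory as they are here.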

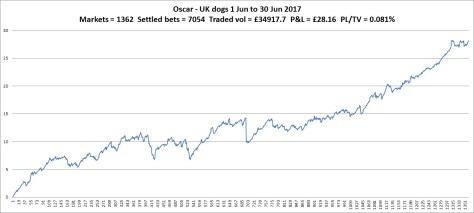

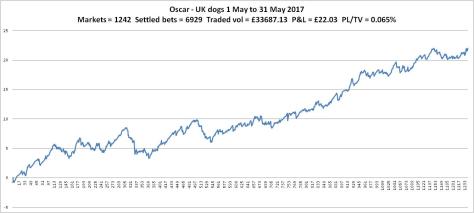

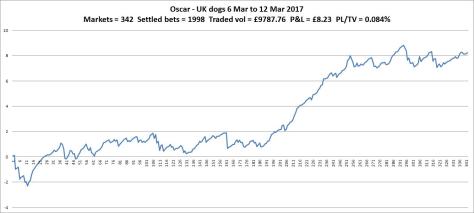

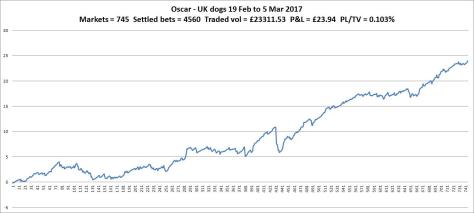

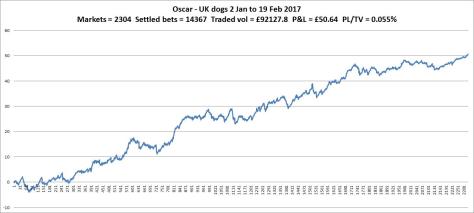

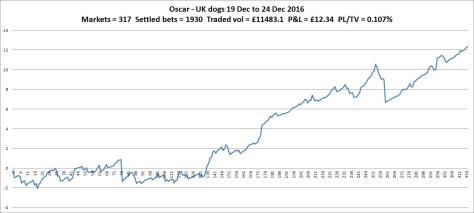

The UK dogs have done well this month. I’m considering adjusting the stake range to allow higher bets, but I want to trial it on specific markets first. I’m thinking of those that are televised and tend to have much higher activity.

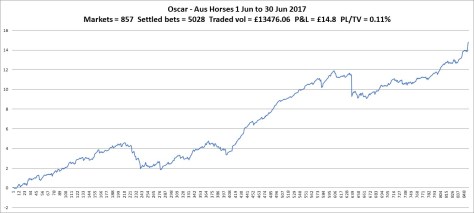

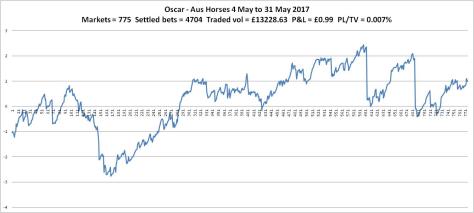

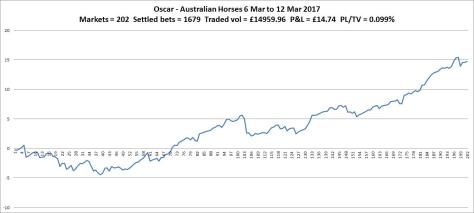

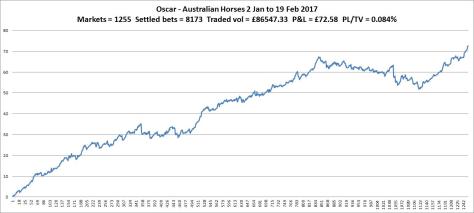

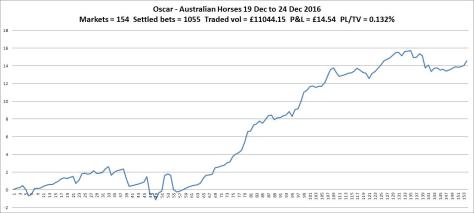

An improved chart from the Aus horses compared to recent months. It’s nice to see a good return as I was beginning to lose patience with it and was considering stopping this bot. It’s been a long time since I ran Oscar on the UK horses as there was no value in it for me and I was thinking the Aus horses were going the same way. They still might, to be fair. But for now, with this, it will continue.

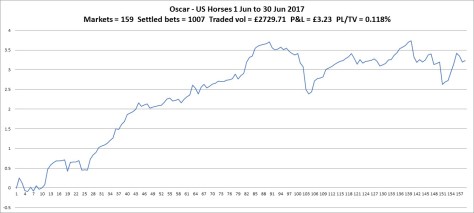

US horses – better result than not trading them at all. There are some really well funded races that I’m missing, purely down to start times being well off. I’ll continue moaning about this point until I finally get a solution in place (I’ve had some good suggestions from you but the code don’t write itself, I should get on with it).

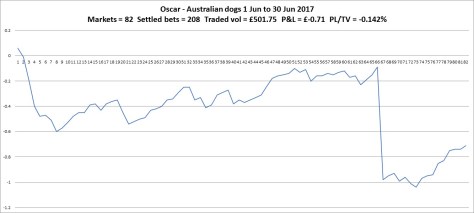

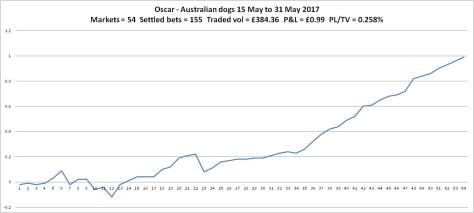

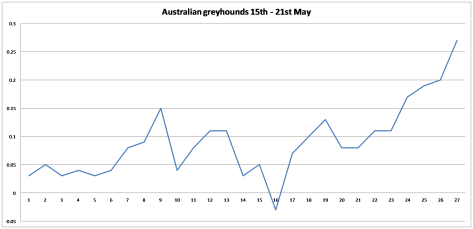

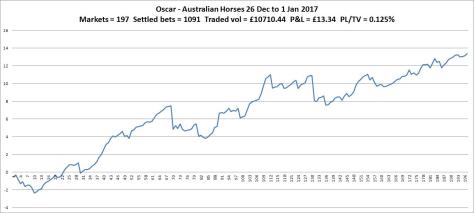

Definitely lower activity on the dish-lickers. An unfortunate loss keeping the return in the negative. Not much harm in continuing for now, I consider this my experimental contribution (why not?)

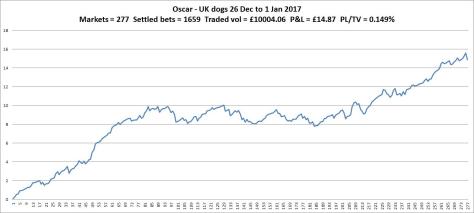

Great 2nd week there. Need to think about handling the bf crash scenario when in autopilot. I don’t think it would be a disaster if I’m not about, but it does create some extra risk.

Thanks. For me the crashes can be a bit annoying. Oscar backs first so the greatest loss is the stake, assuming a clean-cut crash. If you’re laying first, the exposed risk between entry and exit is far greater; add multi-runner trading and it increases further. Something to consider when setting up a bot.
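To put numbers on that, here is a small Python sketch of the worst-case loss on a single open bet if a crash stops the exit trade going in. It uses the standard back and lay arithmetic with decimal odds and ignores commission; the function name is made up for illustration.

```python
def worst_case_loss(side, stake, odds):
    """Worst-case loss on one unhedged bet at decimal odds, ignoring commission.

    A back bet can lose at most its stake.
    A lay bet can lose its liability: stake * (odds - 1).
    """
    if odds <= 1.0:
        raise ValueError("decimal odds must be greater than 1.0")
    if side == "back":
        return stake
    if side == "lay":
        return stake * (odds - 1.0)
    raise ValueError("side must be 'back' or 'lay'")
```

For example, a stake of 10 at odds of 5.0 risks 10 when backed first but 40 when laid first, and the gap widens as the odds lengthen.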