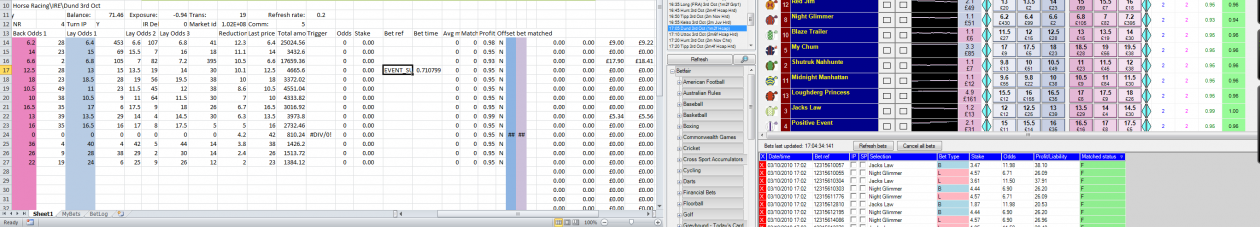

I spent as much time as I could working on the data collection idea, but it wasn't as straightforward as I'd hoped. The coding wasn't the problem; working out what I actually wanted to do was the bit that exercised my mind. Collecting data in an array and then storing selected parts from around trades (printing them to an Excel sheet) is something I can do. But the amount of data is quite large. For instance, if I want to store data from 10 seconds before a trade to 10 seconds after, refreshing every 0.2 seconds, that gives 100 data sets (20 s ÷ 0.2 s). Sometimes the out trade happens 30 seconds or more later, which means 200+ data sets. And to have a full view of the market for UK dogs (6 runners), with one row per runner per data set, that's 1,200 rows (200 × 6). Some events can have 40 or more bets placed, so that's thousands of rows for one race.
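To check the scale of the problem, the arithmetic above can be written as a couple of small helpers. This is a minimal sketch assuming one snapshot per refresh and one row per runner per snapshot; the function names are hypothetical, and the figures plugged in are the ones quoted in the post.

```python
REFRESH_S = 0.2  # refresh interval quoted in the post
N_RUNNERS = 6    # UK dogs

def snapshots(seconds_before, seconds_after, gap_s=0.0, refresh_s=REFRESH_S):
    """Number of market snapshots in a window around a trade.

    gap_s is the time between the in trade and the out trade
    (0 if only one trade point is being recorded).
    """
    return round((seconds_before + gap_s + seconds_after) / refresh_s)

def rows(n_snapshots, n_runners=N_RUNNERS):
    """One spreadsheet row per runner per snapshot."""
    return n_snapshots * n_runners

print(snapshots(10, 10))   # 10 s either side of a single trade
print(rows(200))           # 200 data sets across 6 runners
print(40 * rows(200))      # a race with 40 bets, if every window is that long
```

The 40-bet figure is an upper-end estimate: it assumes every trade gets a full, non-overlapping window.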

The next thing I thought was: “what am I actually going to do with this data?” Perform calculations on it to give indications of movement, highs, lows and quantities before and after the trade, I guess. Here's my solution: collect the data in an array, perform the calculations on the data in the array, and print only the results to the sheet. Cracked it. But what calculations? Oh dear, I'm heading down the road of calculus, a subject I've worked with in the past and one I should have remembered more of than I have. I am enjoying this, not despite the challenge but because of it. It may take longer than a few evenings.
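The "calculate in memory, print only the results" idea might look something like the sketch below. It is an illustration, not the bot's actual code: it assumes each snapshot holds a price and a cumulative matched volume for one runner, it writes CSV (which Excel opens) rather than driving Excel directly, and every name in it (`Snapshot`, `window_stats`, `summarise_trade`, `write_summary`, "Trap 1") is hypothetical. The `slope` column is about the simplest bit of calculus that applies: a finite-difference estimate of the rate of price change across each window.

```python
import csv
import io
from dataclasses import dataclass

@dataclass
class Snapshot:
    """One market snapshot for a single runner (fields are assumptions)."""
    t: float        # seconds since recording started
    price: float    # e.g. best available back price
    matched: float  # cumulative matched volume on the runner

def window_stats(snaps):
    """Reduce a window of snapshots to a handful of numbers."""
    prices = [s.price for s in snaps]
    span = snaps[-1].t - snaps[0].t
    move = prices[-1] - prices[0]
    return {
        "high": max(prices),
        "low": min(prices),
        "move": move,
        # volume traded inside the window (matched is cumulative)
        "volume": snaps[-1].matched - snaps[0].matched,
        # average rate of change: a first-order finite difference,
        # i.e. a crude estimate of d(price)/dt over the window
        "slope": move / span if span > 0 else 0.0,
    }

def summarise_trade(snaps, trade_t, before_s=10.0, after_s=10.0):
    """Split the in-memory buffer at the trade time and summarise each side."""
    pre = [s for s in snaps if trade_t - before_s <= s.t <= trade_t]
    post = [s for s in snaps if trade_t <= s.t <= trade_t + after_s]
    if not pre or not post:
        raise ValueError("need at least one snapshot on each side of the trade")
    return {"pre": window_stats(pre), "post": window_stats(post)}

def write_summary(runner, summary, stream):
    """Write only the computed results: one row per side of the trade."""
    fields = ["runner", "side", "high", "low", "move", "volume", "slope"]
    writer = csv.DictWriter(stream, fieldnames=fields)
    writer.writeheader()
    for side in ("pre", "post"):
        writer.writerow({"runner": runner, "side": side, **summary[side]})

# Usage: five made-up snapshots for one runner, trade at t = 10 s
buffer = [
    Snapshot(0.0, 3.0, 100.0),
    Snapshot(5.0, 3.2, 150.0),
    Snapshot(10.0, 3.1, 200.0),
    Snapshot(15.0, 2.9, 260.0),
    Snapshot(20.0, 3.0, 300.0),
]
summary = summarise_trade(buffer, trade_t=10.0)
out = io.StringIO()
write_summary("Trap 1", summary, out)
print(out.getvalue())
```

Done this way, only two rows per runner per trade reach the sheet, rather than the hundreds of raw data sets each window would otherwise produce.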

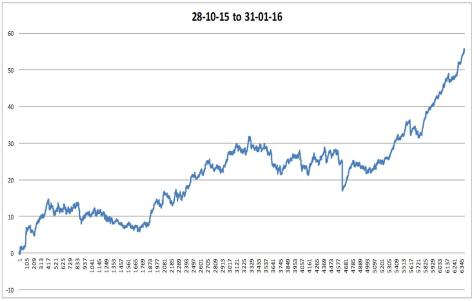

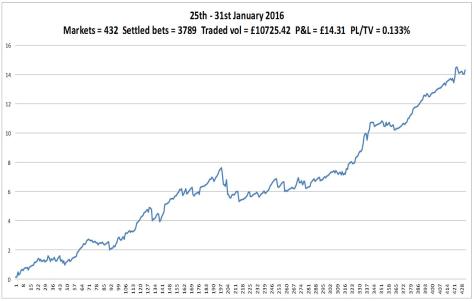

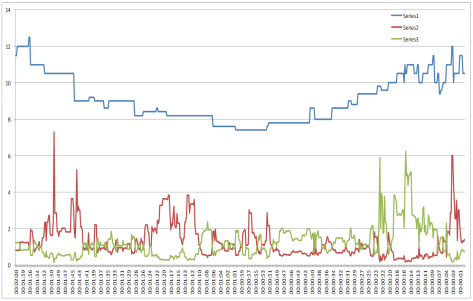

On to the charts. UK dogs were not doing too badly until a big loss. This started as a rogue lay bet placed by the software, for no reason I can find. My bot has three attempts at trading out before the off, at less favourable odds on each attempt. The third attempt was still unmatched when the market was suspended, leaving me exposed on one runner, which turned out to be the winner. This is a steamroller event; however, as it didn't wipe out my bank, I'm thinking of it more as a wacker plate event. These things happen. It occurred on Saturday afternoon and I didn't notice until the evening, by which time trading had stopped. I looked into it but forgot to restart the link, so I missed AUS trading on Sunday as well. I fixed this on Sunday and have now set UK dogs to the AUS settings to see what happens.

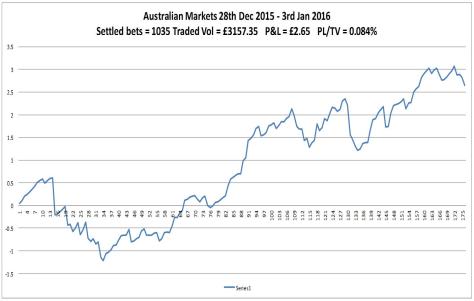

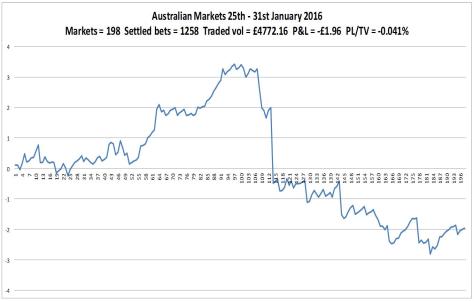

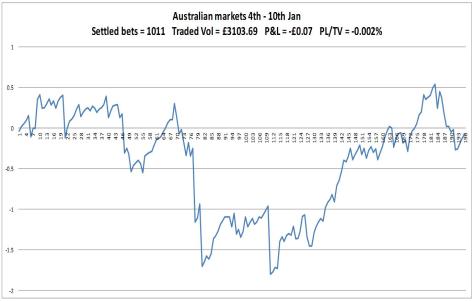

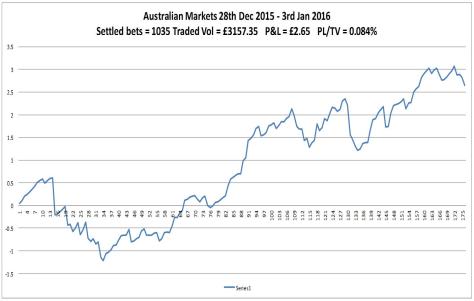

The AUS chart is in line with previous weeks; the increased activity is probably due to Christmas.